"Magic" LLM Models, Real IRS Penalties

What Tax Form Errors Reveal about the Risks of Blindly Trusting LLMs — and What It Means for AI Diligence

As I evaluate AI start-ups, one trend that’s surprised me is how many treat LLMs as a magic silver bullet that solves all of the start-up’s software development needs, from MVP to production at scale (!). Early-stage start-ups claim that their products will use LLMs to perform all sorts of tasks, but how realistic are these claims?

This blog post will help you evaluate a start-up’s claims about its LLM capabilities, as there is a lot of hype surrounding them; no LLM or technical expertise required! I’ll evaluate how LLMs and other software solutions do in processing IRS Form 1040 to help you build intuition about what’s too good to be true, especially in industries where the response has to be 100% correct, or there’s risk of a fine/lawsuit/audit/death. (I’m much less concerned about the use of LLMs for content creation, where the cost of sending a less engaging email or blog post is low.)

We’ll see how poor the baseline performance is for LLMs (and most of the other solutions), contrary to many of the (early-stage start-up) pitches I hear, which claim that LLMs “just work” and require no additional human expertise. To test the claim that LLMs perform well from the outset, I gave the LLMs no examples or guardrails.

Executive Summary: Orange Flags to Watch Out For in Diligence

After evaluating how LLMs and other software solutions perform in processing IRS Form 1040, I’ve identified three key areas to explore in diligence (with your team or with the help of an expert) whenever a start-up raises any of the following in its pitch deck, roadmap, or hiring plan.

LLMs are not ready for prime time when an exact answer is required and/or the consequences of getting the answer wrong (such as a lawsuit, audit, fine, or cost) are high. Does the team think otherwise?

Even non-LLM open-source or free solutions that have been around for a while may not be suitable for a start-up's use case, when an exact answer is required and/or the consequences of getting the answer wrong (such as a lawsuit, audit, fine, or cost) are high. Has the team identified a concrete software and implementation strategy for solving their specific use case?

Custom development focused on improving the underlying model architecture is akin to a PhD thesis! This process can take years with no guarantees of success, and does not include the time it would take to make the implementation customer-facing or scalable (!). How realistic is the team’s strategy (from hiring to execution) for custom development?

Use Case: Extracting Fillable Fields from IRS Form 1040

We’ll see how well LLMs compare to other approaches for extracting the input and text fields of the IRS Form 1040. I chose the IRS Form 1040 for this example because:

It’s been around since 1914 (!), and everyone is familiar with the form because they’ve used it at some point in their lives to file taxes.

The PDF format has been around since the 1990s, and many developers have worked on solutions to process it, yet they may still need work.

I wanted to demonstrate that LLMs are not yet ready for prime time when you need an exact answer! I focused on a regulated industry where the risk of getting an incorrect response has tangible monetary consequences, such as an audit and a fine by the IRS. As consumers, we also don’t want to pay taxes on $1 million if we only made $10,000!

It’s relatively easy to verify it manually, rather than relying on vibe checking.

Later, if we decide to build a “tax co-pilot”, we’ll need to incorporate and address the evolving tax code as part of our tax strategy.

Ideally, I’m looking for a solution to:

Identify a template that can return the same number of entries, regardless of how many fields are filled in.

Get all the entries correct and miss nothing, especially if the field is an identifier, like an SSN, PIN, account number, etc.

No hallucinations!

Open-source or free to try.

Simplicity over complexity, in case we need to debug the codebase, especially at scale.
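The first three criteria above can be checked mechanically rather than by vibe checking. Here is a minimal sketch, assuming each solution’s output has been normalized into a dictionary of field name to value and that a hand-verified ground-truth dictionary exists for the sample form. The function name `score_extraction` and the field names are hypothetical and are not part of any tool evaluated here.

```python
def score_extraction(expected: dict, extracted: dict) -> dict:
    """Compare one solution's output to hand-verified ground truth."""
    expected_keys = set(expected)
    extracted_keys = set(extracted)

    # Fields on the form that the solution never returned.
    missing = sorted(expected_keys - extracted_keys)
    # Fields the solution returned that do not exist on the form.
    hallucinated = sorted(extracted_keys - expected_keys)
    # Fields returned with a value that differs from the ground truth.
    wrong = sorted(
        key for key in expected_keys & extracted_keys
        if expected[key] != extracted[key]
    )
    return {
        "missing": missing,
        "hallucinated": hallucinated,
        "wrong": wrong,
        "correct": len(expected_keys) - len(missing) - len(wrong),
        "total": len(expected_keys),
        "exact_match": not (missing or hallucinated or wrong),
    }


# Made-up values for illustration; not taken from any real form.
truth = {
    "first_name_and_mi": "Jane Q",
    "ssn": "000-00-0000",
    "filing_status_mfj": "Yes",
}
output = {
    "first_name": "Jane",        # one field split into two
    "middle_initial": "Q",
    "ssn": "000-00-0001",        # identifier off by one digit
    "filing_status_mfj": "Yes",
}
report = score_extraction(truth, output)
print(report)
```

For an identifier such as an SSN, anything short of `exact_match` being true is a failure, which is why the sketch reports missing, hallucinated, and wrong fields separately instead of a single accuracy percentage.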

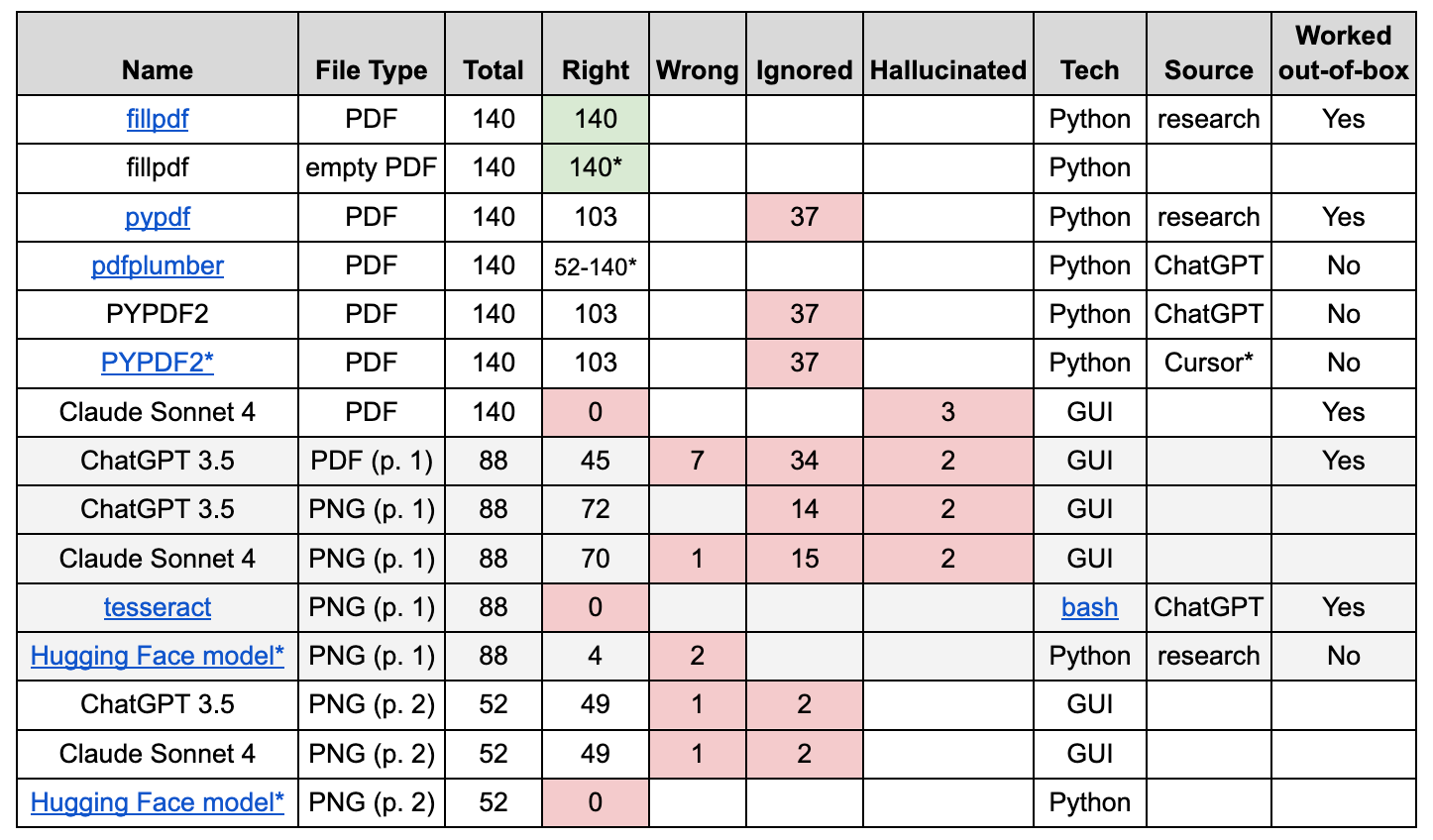

TL;DR: LLMs Will Get You Fined!

While I expected LLMs not to perform as well as other solutions, which was the primary motivation for this blog post, I was surprised by just how spectacularly some of the LLMs (and some of the other solutions) failed, as shown in the table below.

For example, in processing the first page of the PDF, ChatGPT:

Hallucinated that the field “first name and middle initial” is two fields!

Decided that out of all the 8 filled-in fields in the sample form (shown below), only “married filing jointly” was selected, ignoring the 7 other entries!

Made up or forgot about entries in the income-related fields, getting only 11 out of 31 of the entries correct here!

Background on Ways to Process PDFs

There are a few ways to handle PDFs, by treating the PDF as a:

Super flexible data format (but is it too flexible?);

Image format, from which we will extract text via Optical Character Recognition or OCR;

SVG format, which we will skip, as this is a less standard process.

In the case of Form 1040, we’re most interested in the text the user enters in the form, i.e., the form values, rather than all the information in the PDF.

Hackers on Hacker News point out that doing so “is like using a rock as a hammer and a screw as a nail…”, i.e., don’t expect much!

We’ve evaluated the most common LLM (i.e., no-code or Graphical User Interface (GUI)) and Python-based solutions to handle these tasks. These were either found through Google and Stack Overflow research (including a Hugging Face model trained to handle the 2023 version of the IRS Form 1040) or suggested by ChatGPT and Cursor.

Code that works out-of-the-box means I can copy and paste the snippet suggested by the chatbot or code repository into Python/Terminal, as instructed, and only need to change the file name to get the code to run. (Once I got it working end-to-end to process one form, it worked on another form of the same PDF/PNG format.)

Test Scenarios to Evaluate Consistency

Empty Form 1040 (2024 version) from the IRS website, saved as a PDF.

Completely filled-in form with all the boxes checked or filled in with made-up entries, saved as a PDF.

Page 1 of the completely filled-in form (case #2 above) saved as an image in a PNG file

Page 2 of the completely filled-in form (case #2 above) saved as an image in a PNG file

To help us compare the performance of implementations, the 2024 version of Form 1040 has 140 fields where the user can add information: 88 fields on page 1, 52 fields on page 2, with 37 checkboxes between the two pages. (I don’t count the two signature and two date fields, or cell #30 that’s “reserved for future use”, though it’s interesting to see which solutions pick up on these fields and which ignore them.)
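The field counts above give a simple template check: a solution should return the same field names for the empty and the filled-in form, and the total should match. The sketch below is one way to express that, assuming each extraction is a dictionary keyed by field name; `check_template` and the tiny stand-in dictionaries are hypothetical.

```python
# Field counts for the 2024 Form 1040, as stated in the text above.
EXPECTED_FIELDS_PER_PAGE = {1: 88, 2: 52}
EXPECTED_TOTAL = sum(EXPECTED_FIELDS_PER_PAGE.values())  # 88 + 52 = 140


def check_template(empty_fields: dict, filled_fields: dict,
                   expected_total: int = EXPECTED_TOTAL) -> list:
    """Return a list of problems; an empty list means the check passed."""
    problems = []
    for label, fields in (("empty", empty_fields), ("filled", filled_fields)):
        if len(fields) != expected_total:
            problems.append(
                f"{label} form: {len(fields)} fields, expected {expected_total}"
            )
    # The set of field names should not depend on what the user typed.
    differing = set(empty_fields) ^ set(filled_fields)
    if differing:
        problems.append(f"field names differ between scenarios: {sorted(differing)}")
    return problems


# Tiny stand-in with three fields instead of 140, for illustration only.
empty = {"f1": "", "f2": "", "f3": ""}
filled = {"f1": "Jane", "f2": "Q"}  # this run silently dropped f3
problems = check_template(empty, filled, expected_total=3)
print(problems)
```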

Evaluating Solutions – or Why LLMs Will Get You Fined!

Because fillpdf, created by Tyler Houssian based on this StackOverflow exchange, focuses on extracting “interactive form fields” – all the fields we’re interested in – this specialized solution works the best for our use case, regardless of the form being empty or completely filled-in, which is not true of other solutions tested. The other solutions, because they are more general (from parsing PDFs to parsing any files), tend to perform worse at this task, which needs more context to do better.
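For readers who want to try this themselves, a minimal sketch of reading form fields with fillpdf might look like the following. It relies on the package’s `fillpdfs.get_form_fields` function. The file name, the helper names, and the rule that only empty strings and `None` count as blank are assumptions for illustration; in particular, how unchecked checkboxes are represented should be verified against real output.

```python
def extract_form_fields(pdf_path: str) -> dict:
    """Return {field_name: value} for the interactive form fields in a PDF."""
    # Imported lazily so summarize() below works without fillpdf installed.
    from fillpdf import fillpdfs

    return fillpdfs.get_form_fields(pdf_path)


def summarize(fields: dict) -> dict:
    """Count how many fields exist and how many carry a non-blank value."""
    # Assumption: only "" and None are treated as blank.
    filled = [name for name, value in fields.items() if value not in ("", None)]
    return {
        "total": len(fields),
        "filled": len(filled),
        "empty": len(fields) - len(filled),
    }


# With a real file (hypothetical name), usage would be:
#   fields = extract_form_fields("f1040_filled.pdf")
# Stand-in dictionary with made-up field names so the sketch runs as-is:
sample = {"field_a": "Jane Q", "field_b": "", "field_c": None}
summary = summarize(sample)
print(summary)
```

Running `summarize` on both the empty and the completely filled-in form is a quick way to confirm that the total stays constant while only the filled count changes.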

To the surprise of no one, Deep Learning models (which include ChatGPT and Claude LLMs, as well as the Computer Vision Hugging Face model) either missed text fields or, worse, hallucinated additional content.

*The Hugging Face model is an image recognition model trained on (I assume only on) the first page of the 2023 IRS Form 1040. One of the first differences between 2023 and 2024 is the addition of two “Filing Status” fields, which disrupt the positioning (and hence accuracy) of the remaining text fields in the remainder of the form. As a result, I didn’t expect this model to generalize well to the 2024 version or the second page of the form, since there were no examples of this in the 2023 repository.

While multiple start-ups told me that Claude seems to be better at processing their PDFs, ChatGPT was able to handle this format, whereas Claude assumed that the form was empty.

It is interesting, but not surprising, that ChatGPT’s results for the contents of the text fields differed between PDF and PNG formats; the same was true of Claude. Both LLMs treated the single field “first name and middle initial” as two different fields. Both LLMs (and other solutions) struggled to detect checkboxes.

ChatGPT and Claude also struggled with key identifiers and got them wrong. Claude struggled with a name, PINs, and an account number! ChatGPT struggled with a different PIN and half of the income breakdowns.

While pdfminer identified 140 input fields in the form, it’s hard to verify whether it correctly identified the 37 checkboxes (along with other entries), even with the visual debugger for the package, due to the output format.

If I were vibe checking this, I would call it good. To conduct a more thorough evaluation, I would need to create at least one tax form per cell to verify the accuracy of the detected value, which I’ll leave as an exercise for the reader. :)

This package was the only one to detect that I had accidentally added a line to the preparer’s ID (PTIN).

Only fillpdf and Claude detected the empty cell #30 that’s “reserved for future use,” which speaks to a degree of generalizability to other IRS Form 1040 versions.

tesseract performed poorly because it doesn’t extract values from boxes, but instead aims to extract all the text from the page.

Outside of the content the solutions tried to extract, it was interesting to see that:

ChatGPT and Cursor both recommended PyPDF2, a package that has been deprecated since 2016.

Claude was the only one to conclude that, based on the content provided in the completely filled-out form, this is a sample form, not one that I would submit to the IRS.

Every time an LLM suggested a package, I verified its legitimacy before attempting to run the code.

As Alex Samson points out, you still “have to understand the code logic [and] manage the codebase structure.” When iterating with Cursor, which generated a novel in multiple genres when all I needed was a tweet, I used a snippet it created and wrote a few lines of code to get it to run.

I was OK with OpenAI and Anthropic having access to my sample IRS form; I would not be comfortable letting them process my personal tax forms or access my customers’ forms (if I were a start-up offering tax strategy services).
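One of the observations above is that every LLM-suggested package was checked before running any code. The sketch below shows one possible signal, not necessarily the check used for this post: how long ago a package last published a release, based on PyPI’s public JSON endpoint. The helper names are hypothetical, and a recent release alone does not prove a package is trustworthy.

```python
import json
import urllib.request
from datetime import datetime


def fetch_pypi_metadata(package: str) -> dict:
    """Download a package's public metadata from the PyPI JSON API."""
    url = f"https://pypi.org/pypi/{package}/json"
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def latest_upload(metadata: dict):
    """Return the most recent upload time across all releases, or None."""
    times = [
        datetime.fromisoformat(file_info["upload_time"])
        for files in metadata.get("releases", {}).values()
        for file_info in files
    ]
    return max(times) if times else None


def years_since_last_release(metadata: dict, today: datetime):
    """Approximate age, in years, of the newest release; None if no releases."""
    latest = latest_upload(metadata)
    if latest is None:
        return None
    return (today - latest).days / 365.25


# Stand-in metadata with made-up dates, so the sketch runs without a network.
stub = {
    "releases": {
        "0.9": [],
        "1.0": [{"upload_time": "2016-05-18T12:00:00"}],
    }
}
age = years_since_last_release(stub, today=datetime(2024, 5, 18, 12, 0, 0))
print(f"last release was about {age:.1f} years ago")
```

With network access, `fetch_pypi_metadata("some-package")` would supply the real metadata in place of the stub.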

Advice for Diligence

As we saw in the table above, not all software solutions are created equal when an exact answer is required to solve the problem!

Had fillpdf not existed, the start-up would have to try to develop its own solution, which founders may pitch as one of the following three strategies:

Figure out how to process the flexible PDF format themselves.

Notice that, in the case of the PDF format, many people have been working on it since before 2005, and it remains a work in progress.

Tune a (relatively small) subset of the billions of parameters to get an LLM to perform better on their problem. The more exact an answer the LLM should provide, and the higher the cost of getting the answer wrong, the more work (including tuning, guardrails, and training data-related tasks) needs to be done.

I would argue that this is analogous to a Master’s thesis, which can take at least 12 months, with no guarantees of success, and does not include the time it would take to make the implementation customer-facing or scalable (!); or

Iterate on the algorithm's underlying architecture (with or without additional parameter tuning), resulting in a new “foundational model”.

This is akin to a PhD thesis! This process can take years with no guarantees of success and does not include the time it would take to make the implementation customer-facing or scalable (!).

If a start-up is considering either of the latter two scenarios, look out for a hiring plan that includes ML engineers specializing in LLMs, AI engineering roles, or similar.

I would further argue that a start-up’s go-to-market or scaling strategy should not be based on the success of multiple graduate-level initiatives, as there is no guarantee of how long it will take (if at all) to yield results, or that the code will be ready for real-time customer-facing recommendations! After all, it has taken us more than 50 years of text processing research (and billions of dollars) to get to ChatGPT (and similar models).

Based on this discussion, here are some key points to consider evaluating in diligence.

Time-to-Market

Do start-ups have the runway to develop custom solutions to handle what they perceive as customer problems?

[You may need an expert to help you.] Are there workarounds they can implement that provide more value now, in shorter time frames, than a graduate-level thesis?

[You may need an expert to help you.] Meet with the team to have them walk everyone through their more detailed vision, scope, execution, hiring strategy, and budget for the LLM/ML/AI use case(s).

AI/Technology

Is this the right AI solution for the problem the team is trying to solve?

Is an exact answer required, and/or are the consequences of getting the answer wrong (such as a lawsuit, audit, fine, or cost) high?

[You may need an expert to help you.] If the team is using (or plans to use) LLMs (or other algorithms), how will they prevent hallucinations and incorrect answers?

[You may need an expert to help you dive into this in more detail.] Ask what the team is using to process customer inputs to evaluate their concrete software and implementation strategy for solving their specific use case.

[You may need an expert to help you.] Evaluate how the team is (re)training, validating, and (not vibe) testing the model(s).

How does the team address reproducibility (of answers/results)?

[You may need an expert to help you dive into this in more detail.] Something broke; what happens next?

How does the team handle on-call?
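The reproducibility question above can be made concrete in a demo: run the same input through the product several times and see whether the answers match. Here is a minimal sketch, assuming the product can be wrapped in a function that takes a document and returns a dictionary of field values; `reproducibility_report` and both stub extractors are hypothetical.

```python
import hashlib
import itertools
import json
from collections import Counter


def fingerprint(result: dict) -> str:
    """Hash a result in a key-order-independent way."""
    canonical = json.dumps(result, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reproducibility_report(extract, document, runs: int = 5) -> dict:
    """Call extract(document) repeatedly and count distinct outputs."""
    counts = Counter(fingerprint(extract(document)) for _ in range(runs))
    return {
        "runs": runs,
        "distinct_outputs": len(counts),
        "reproducible": len(counts) == 1,
    }


# Stub extractors with made-up values, standing in for a real product.
def stable_extractor(document):
    return {"ssn": "000-00-0000"}


_alternating = itertools.cycle(["000-00-0000", "000-00-0001"])


def flaky_extractor(document):
    return {"ssn": next(_alternating)}


stable_report = reproducibility_report(stable_extractor, "form.pdf")
flaky_report = reproducibility_report(flaky_extractor, "form.pdf", runs=4)
print(stable_report)
print(flaky_report)
```

Identical answers across runs do not mean the answers are correct, so this check complements a comparison against hand-verified values rather than replacing it.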

Costs

Are the LLMs self-hosted?

Has the team discussed data privacy? Or is selling data part of the revenue model?

How much are the cloud computing costs per month? What does this include?

Scalability

[You may need an expert to help you.] Consider bringing a PDF (or a common customer use case) to evaluate how well the product solves the problem it claims to solve, end-to-end, on data that it has not seen before.

Good Luck!
